© Laurenz Albe 2021

When analyzing customer's problems, I have seen the mess you can get into if you disable autocommit in your interactive client, so I'd like to bring this topic to a wider audience.

What is autocommit?

In PostgreSQL, like in any other ACID-complicant database, each statement runs in a transaction: if the statement causes an error, PostgreSQL undoes all its effects.

You can start transactions explicitly using the START TRANSACTION statement (or BEGIN in PostgreSQL). However, if you don't do that, different RDBMS' behave differently:

- some, like Oracle, implicitly start a transaction when you issue the first statement, and you have to run a

COMMIT statement to end the transaction - others, like PostgreSQL, run each statement in its own transaction until you explicitly start a transaction

The latter mode is called autocommit mode.

PostgreSQL and autocommit

PostgreSQL operates in autocommit mode, and there is no way to change that behavior on the server side.

Back in 2002, release 7.3 introduced a database parameter, autocommit, that allowed users to turn autocommit mode off. This proved to be a bad mistake: now the effects of SQL statements varied depending on that database setting, which led to all kinds of confusion and problems. Consequently, release 7.4 in 2003 removed that feature again.

Instead, PostgreSQL now implements autocommit mode on the client side. Almost all database clients and APIs offer a way to turn autocommit off. A few examples:

- in

psql, you can run "\set AUTOCOMMIT off" - with JDBC, you can call

java.sql.Connection.setAutoCommit(boolean) - in psycopg2, you can call

connection.set_session(autocommit=True) - with pgAdmin 4, you can click the "down" arrow next to the

icon in the query tool to turn off autocommit

icon in the query tool to turn off autocommit - with DBeaver, you can click the

icon in the SQL editor to disable autocommit

icon in the SQL editor to disable autocommit

Note that in the above list, all clients except for psycopg2 run in autocommit mode by default.

The way that clients disable autocommit is by automatically sending a BEGIN statement before the first statement after the end of a transaction.

The appeal of disabling autocommit

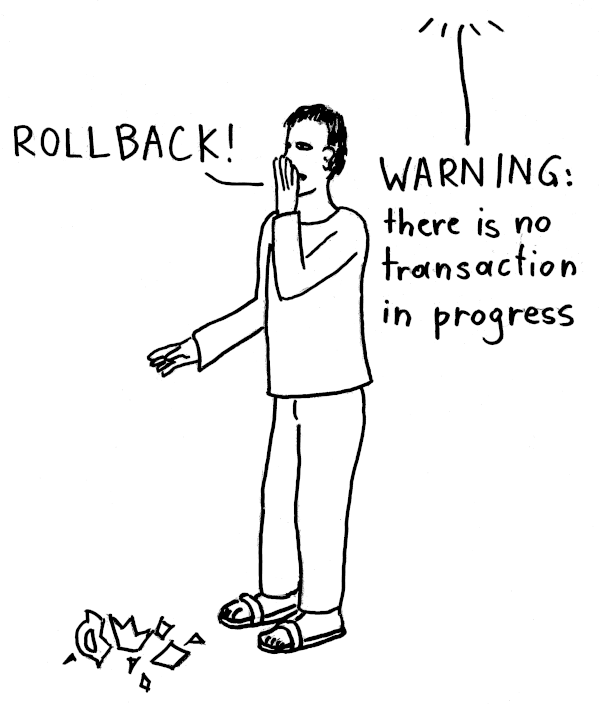

If you are inside a database transaction, nothing much can go wrong: if you make a mistake, you can simply issue ROLLBACK and everything you did is undone. This is appealing because it allows you to recover from mistakes like running a DELETE statement without a WHERE clause.

Moreover, users who are used to database systems that do not operate in autocommit mode (for example, Oracle), like to disable autocommit to get the behavior that they are accustomed to.

This seems to be a safe and useful setting, so many people are surprised to learn that it is a really bad idea in PostgreSQL.

Problems with "autocommit off"

If you disable autocommit, and you don't explicitly commit or roll back, your database session will remain in the state "idle in transaction". Such long-running transactions cause two kinds of problems in the database:

Problem: locks in the database

PostgreSQL holds the locks that SQL statements take until the transaction ends. Now if you ran a data modifying statement after your last commit, some rows in your database are locked. Any other transaction that tries to modify such a row will hang until your transaction is ended.

To make things worse, each SQL statement causes a table-level lock on the tables it accesses: even a "harmless" SELECT will take an ACCESS SHARE on the tables it reads. This is to prevent concurrent data definition statements like DROP TABLE or ALTER TABLE from deleting the table or changing its definition while the SELECT is reading it.

As a consequence, a session with an open transaction can block data definition statements indefinitely long. In a DevOps environment, where new versions are deployed frequently, this will lead to serious disruptions. So even a simple SELECT from a client, with autocommit disabled, can do damage!

Problem: autovacuum maintenance

Whenever you update or delete a row in PostgreSQL, it retains the old version of the row to satisfy concurrent read requests. These old versions soon become obsolete and have to be removed. This is the job of the autovacuum daemon in PostgreSQL. If your database session has a long-running open transaction, it is possible that autovacuum cannot delete any row versions that are younger than the start of your transaction, because you might still need them.

The consequence is that autovacuum keeps running to get rid of those "dead tuples", but it cannot delete them and cannot make any progress. This leads to table bloat, where a table grows out of proportion while containing mostly junk data. Once you have a bloated table, you usually have to take downtime to run VACUUM (FULL) to fix the problem. There are tools like pg_squeeze to make this less painful.

How to defend against "autocommit off"

There are a couple of things that the database administrator can do to mitigate these problems:

- Set the parameter

idle_in_transaction_session_timeout to a value greater than 0. This will automatically cancel all transactions that are idle for longer than the specified time. The transaction is rolled back, and the client receives an error message. - Set the parameter

old_snapshot_threshold to a value greater than -1. Then VACUUM will clean up dead tuples that have been dead for longer than that time, even if a long-running transaction might still need them. If the transaction tries to access a page that has been processed by such a VACUUM, it will receive an error message. Note that this parameter will not prevent idle transactions from holding locks!

While these parameters allow you to prevent the worst, they are "not nice", because they will lead to errors and rollbacks on the client side.

Conclusion

Nice as it may seem, resist the temptation to disable autovacuum in your interactive client. Get used to PostgreSQL's autocommit mode, otherwise you will endanger the health of your database. The administrator can set idle_in_transaction_session_timeout and old_snapshot_threshold to prevent such damage, at the price of causing errors on the client side.

The post Disabling autocommit in PostgreSQL can damage your health appeared first on Cybertec.